Bagging predictors. Machine learning

A weak learner is used for creating a pool of N weak predictors.

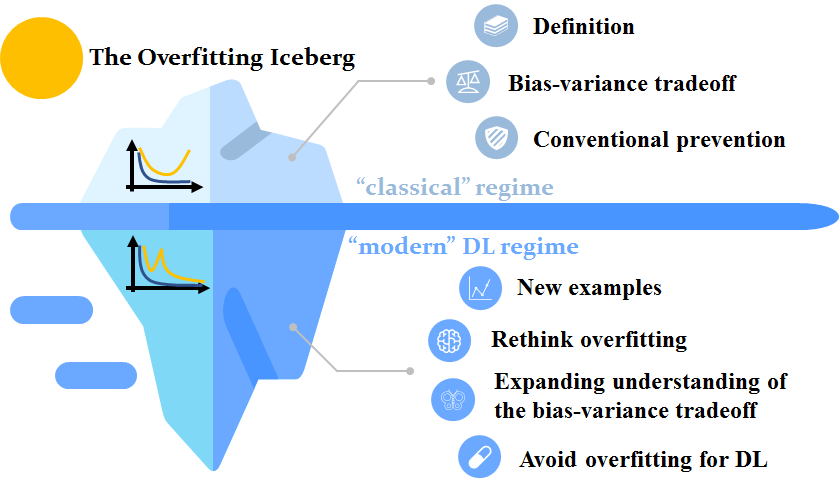

4 The Overfitting Iceberg Machine Learning Blog Ml Cmu Carnegie Mellon University

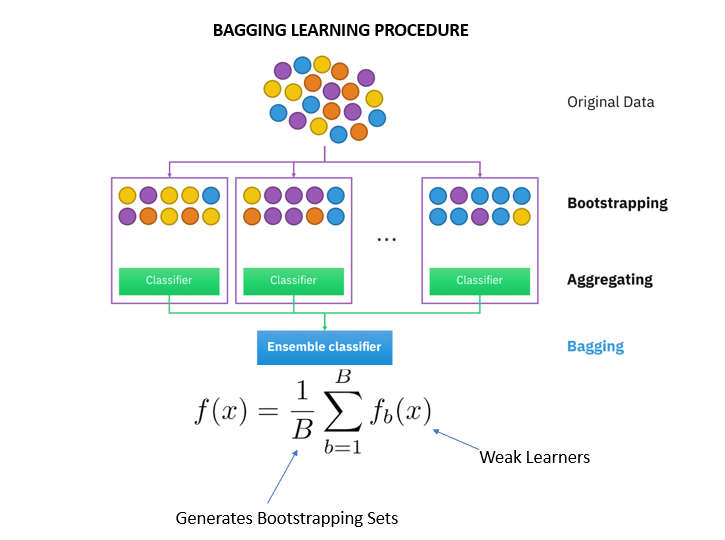

Bagging, also known as bootstrap aggregating, is an ensemble learning technique that helps to improve the performance and accuracy of machine learning algorithms.

Technical Report No. 421, September 1994. Partially supported by NSF grant DMS-9212419. Department of Statistics, University of California, Berkeley, California 94720. The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class.
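The two aggregation rules in the sentence above can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper; the function names `aggregate_regression` and `aggregate_classification` are made up for this example, and the tie-breaking rule (first class seen wins) is an assumption.

```python
from collections import Counter
from statistics import mean

def aggregate_regression(predictions):
    """Average the numerical predictions of all predictor versions."""
    return mean(predictions)

def aggregate_classification(predictions):
    """Return the class with the most votes (plurality vote).

    Assumption: ties go to the class that appears first in the list.
    """
    return Counter(predictions).most_common(1)[0][0]

print(aggregate_regression([2.0, 3.0, 4.0]))
print(aggregate_classification(["cat", "dog", "cat"]))
```

A plurality vote only needs the most frequent class, not a majority, so it also works when there are more than two classes.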

In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling. Machine Learning 24, 123-140, 1996. Bankruptcy Prediction Using Machine Learning, Nanxi Wang, Journal of Mathematical Finance, Vol. 7, No. 4, November 17, 2017.

Using Iterated Bagging to Debias Regressions. Half & Half Bagging and Hard Boundary Points.

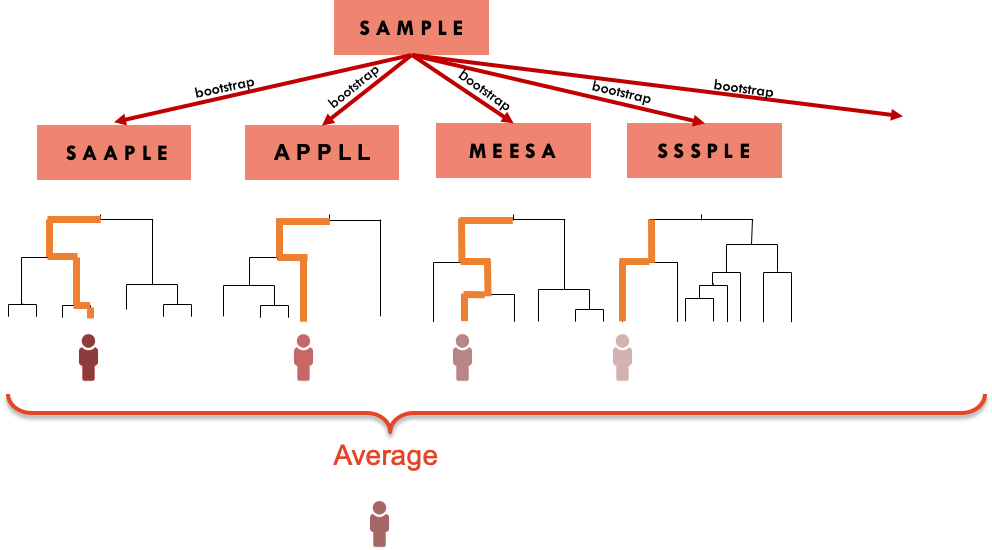

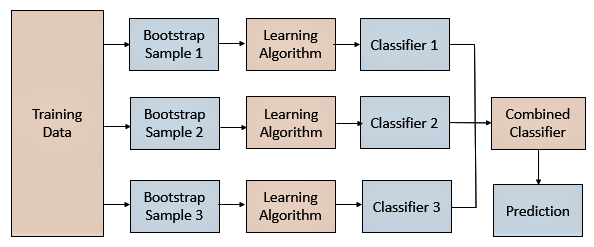

As its name suggests, bootstrap aggregation is based on the idea of the bootstrap sample. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms machine learning practitioners learn and is designed to improve the stability and accuracy of regression and classification algorithms.

Bootstrap Aggregation, or Bagging for short, is an ensemble machine learning algorithm. After reading this post you will know about. However, when the relationship is more complex, we often need to rely on non-linear methods.

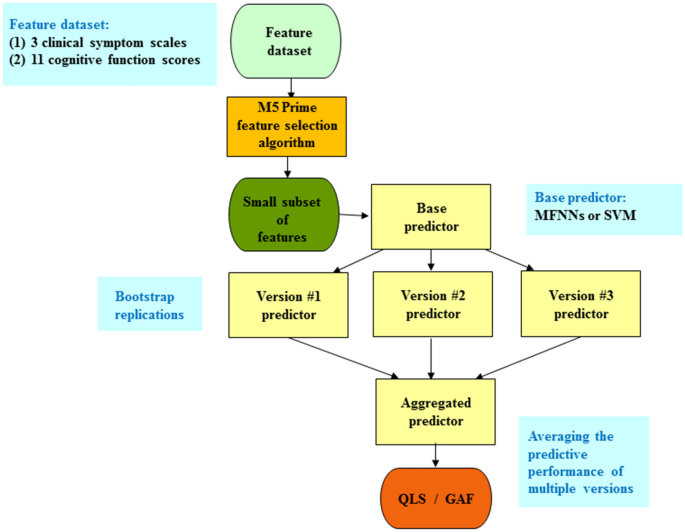

We hypothesized that our bagging ensemble machine learning method would be able to predict the QLS- and GAF-related outcome in patients with schizophrenia by using a small subset of selected clinical symptom scales and/or cognitive function assessments. Berkeley, CA 94720. leo@stat.berkeley.edu. Editor.

For a subsampling fraction of approximately 0.5, subagging achieves nearly the same prediction performance as bagging while coming at a lower computational cost. Bagging also reduces variance and helps to avoid overfitting. Random Forest is one of the most popular and most powerful machine learning algorithms.
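The difference between the two resampling schemes can be sketched as follows. This assumes subagging draws a fraction of the training set without replacement, in contrast to the bootstrap's full-size draw with replacement; the helper name `subsample` and the rounding rule are illustrative choices, not taken from the source.

```python
import random

def subsample(data, fraction, rng):
    """Draw round(fraction * len(data)) distinct items, without replacement."""
    k = max(1, round(fraction * len(data)))
    return rng.sample(data, k)

rng = random.Random(0)
data = list(range(10))
half = subsample(data, 0.5, rng)
print(len(half), len(set(half)))
```

With a fraction of 0.5, each base predictor is trained on half as many points, which is where the lower computational cost comes from.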

Bagging and Boosting in Machine Learning.

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. Bagging Predictors, Leo Breiman, Statistics Department, University of California. Bagging in Machine Learning: when the link between a group of predictor variables and a response variable is linear, we can model the relationship using methods like multiple linear regression.

Bagging uses a base learner algorithm, for example classification trees. The diversity of the members of an ensemble is known to be an important factor in determining its generalization error.

Bagging Predictors, by Leo Breiman. Technical Report No. 421.

In bagging, a random sample of data in a training set is selected with replacement, meaning that the individual data points can be chosen more than once. Ensemble models combine multiple learning algorithms to improve on the predictive performance of each algorithm alone. Bagging Predictors, Leo Breiman, Department of Statistics, University of California at Berkeley. Abstract: Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor.
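Sampling with replacement, as described in the paragraph above, can be sketched like this. The helper name `bootstrap_sample` and the toy data are assumptions made for illustration. The check at the end relies on a standard result: a bootstrap sample of size n contains on average about 1 - 1/e (roughly 63%) of the distinct original points when n is large.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

rng = random.Random(0)
data = list(range(10_000))
sample = bootstrap_sample(data, rng)

# Some points are picked several times, others not at all.
distinct_fraction = len(set(sample)) / len(data)
print(len(sample), round(distinct_fraction, 2))
```

The points left out of a given sample differ from draw to draw, which is what makes the resulting predictors differ from one another.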

Bootstrap aggregation, or bagging, is an ensemble meta-learning technique that trains many. We see that both the bagged and subagged predictors outperform a single tree in terms of mean squared prediction error (MSPE).

It is a type of ensemble machine learning algorithm called Bootstrap Aggregation, or bagging. An Introduction to Bagging in Machine Learning: when the relationship between a set of predictor variables and a response variable is linear, we can use methods like multiple linear regression to model the relationship between the variables. Specifically, it is an ensemble of decision tree models, although the bagging technique can also be used to combine the predictions of other types of models.

Ensemble methods like bagging and boosting, which combine the decisions of multiple hypotheses, are some of the strongest existing machine learning methods. Bagging, also known as bootstrap aggregation, is an ensemble learning method that is commonly used to reduce variance within a noisy dataset.

Bagging is a technique used in ML to make weak classifiers strong enough to make good predictions. Every predictor is generated from a different sample, drawn by random sampling with replacement from the original dataset. There are two main strategies for ensembling models, bagging and boosting, and many examples of predefined ensemble algorithms.
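Putting the pieces together, the procedure in the paragraph above (one bootstrap sample per predictor, then aggregation) might look like the sketch below for a regression task. The base learner here is a one-split regression stump chosen only to keep the example short; the names `fit_stump` and `bagged_fit`, the toy data, and the choice of 25 predictors are all assumptions for illustration.

```python
import random
from statistics import mean

def fit_stump(points):
    """Fit a one-split regression stump to (x, y) pairs; return x -> prediction."""
    points = sorted(points)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    best = None  # (squared error, threshold, left mean, right mean)
    for i in range(1, len(points)):
        if xs[i] == xs[i - 1]:
            continue  # no threshold can separate equal x values
        left, right = ys[:i], ys[i:]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, (xs[i - 1] + xs[i]) / 2, lm, rm)
    if best is None:  # every x is identical: fall back to the overall mean
        overall = mean(ys)
        return lambda x: overall
    _, threshold, lm, rm = best
    return lambda x: lm if x <= threshold else rm

def bagged_fit(points, n_predictors, rng):
    """Train one stump per bootstrap sample and average their predictions."""
    predictors = []
    for _ in range(n_predictors):
        sample = [rng.choice(points) for _ in points]  # with replacement
        predictors.append(fit_stump(sample))
    return lambda x: mean(p(x) for p in predictors)

rng = random.Random(0)
# Hypothetical toy data: a noisy step from about 0 to about 1 at x = 10.
data = [(x, (0.0 if x < 10 else 1.0) + rng.uniform(-0.1, 0.1)) for x in range(20)]
model = bagged_fit(data, n_predictors=25, rng=rng)
print(model(2), model(17))
```

For classification, the final `mean` would be replaced by a plurality vote over the predicted classes.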

Bagging is used to deal with bias-variance trade-offs and reduces the variance of a prediction model.
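One way to see where the variance reduction comes from is the standard formula for the variance of an average of N identically distributed predictions, each with variance sigma squared and pairwise correlation rho: rho * sigma^2 + (1 - rho) * sigma^2 / N. The sketch below simply evaluates that formula; it is an idealized model rather than a statement about any particular dataset, and the function name `variance_of_average` is made up for this example.

```python
def variance_of_average(sigma2, rho, n):
    """Variance of the mean of n equally correlated predictions.

    sigma2: variance of a single prediction
    rho:    pairwise correlation between predictions (assumed equal for all pairs)
    n:      number of predictions being averaged
    """
    return rho * sigma2 + (1 - rho) * sigma2 / n

# Uncorrelated predictors: variance shrinks like 1/n.
print(variance_of_average(1.0, 0.0, 10))
# Perfectly correlated predictors: averaging does not help.
print(variance_of_average(1.0, 1.0, 10))
# In between: the first term is a floor that more predictors cannot remove.
print(variance_of_average(2.0, 0.5, 4))
```

Predictors trained on bootstrap samples of the same data are correlated, so the benefit of adding more of them levels off. This is also why diversity among ensemble members matters.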
